Saturday, March 25, 2006

Moving Over To TypePad

http://enterprisearchitect.typepad.com

Although I moved my archive over to the new site, I will leave this one up indefinitely. Things will look a bit spartan on the new site while I get set up...hope to see all of you there!

UPDATE: The move over to TypePad is essentially complete, and I am disabling comments and trackbacks on this site. All of the posts here are also on the new site, so if you're linking or trackbacking, please use the new site and its post URLs, as I will be taking this site down in the next couple of months. Thanks!

Tuesday, March 21, 2006

The Real 'Two-Dot-Oh Community' We Live In

James McGovern’s recent Ruby-on-Rails pieces touched off a range war between Ruby developers and himself, with some initial intermediation thrown in from certain industry analysts and EA bloggers. Unfortunately, predictably, and sadly, the ‘debate’ rapidly degenerated into ad hominem attacks on James in various contexts. Despite the fact that I found a good portion of James' commentary to be problematic, the personal attacks were unwarranted and uncalled for. Once commenters and certain bloggers started getting off topic and attacking James’ intelligence and experience wholesale, the whole thing decomposed into one gigantic shitstorm of little value to anyone. Sad.

This post is not about Ruby, or any other programming language, design/development methodology, or technology component. Rather, I’m going to provide some perspective on this latest jihad and explain why the overblown two-dot-oh concept of ‘community’ needs a serious reality check. If the events of the last 4-5 days are what this so-called ‘community’ is about, I don’t want any part of it, and neither should you.

Religious wars over technology such as programming languages and operating systems are nothing new, and they’ve been going on for years. After the internet was invented, but before the web was, we had usenet newsgroups devoted to specific OS platforms, programming languages, and the like. Usenet and its newsgroups still exist today, albeit as an extreme backwater compared to the blogosphere, discussion boards, and other modern forms of group communication over the internet.

The technical newsgroups were just as prone to insulting flame wars as blogs and blog comments are today. Some newsgroups self-corrected and righted themselves such that the signal-to-noise ratio returned to normal and constructive discourse eventually returned. Others never recovered and the key benchmark there was that only the combatants continued to post any material to the group, usually a content-free fusillade launched against the other side that was not worth reading; and all others had permanently abandoned the newsgroup. Eventually, even the combatants grew tired of their sneering nobody-wins-but-let-me-one-up-you-one-more-time game and moved on, most likely to other newsgroups to find another flame war to fight.

So, what’s changed about this ‘community’ between then and now? Other than the technologies, absolutely nothing. With rare exceptions, people act in their own self-interest, and they will continue to do so. When something outside of their worldview irks a group of folks, as James did with his Ruby posts (and yes, I found large portions of his content, while not hateful, ill-advised and not well thought-out), the chest-beating commences, followed by the bullets whizzing by. Of particular interest to me were various disparaging comments in David Heinemeier Hansson's blog about architects and EAs in general. To be expected? Sure. Can it ever be rectified to the satisfaction of all parties? Sadly, it would appear not.

Anne Zelenka warns us about fundamentalism in our approaches, and I have blogged about holistic enterprise architecture viewpoints in the past. One cannot succeed as an architect by embracing one view of the world and showing disdain for all others. What gets me the most is all of the hue and cry over how we're building 'communities' (in the light of 'teaching the world to sing in perfect harmony' or 'Kumbaya over the campfire') when the real motivation, as exposed in a recent Economist article, appears to be primarily business-and-profit-oriented. Think of it this way: Oracle would not be trying to buy every open-source entity available if they didn't smell that sweet nectar of opportunity calling.

Here's a big lesson for the two-dot-oh crowd: if you can't have any sort of professional discourse over various internal technical issues, you won't be having them with paying customers either because you aren't capable of doing that. And perhaps the three-dot-oh mantra will be "We should listen better than we do now."

Don't know about all of the rest of you, but I'm not going to kill myself holding my breath waiting for that, because some things never change.

Saturday, March 18, 2006

Open Source Gets a Bit of a Wedgie

The piece takes a pretty substantial whack at the entire concept. After describing the movement in the software arena, the limitations of open source organizations are explored. An example:

"But the biggest worry is that the great benefit of the open-source approach is also its great undoing. Its advantage is that anyone can contribute; the drawback is that sometimes just about anyone does. This leaves projects open to abuse, either by well-meaning dilettantes or intentional disrupters. Constant self-policing is required to ensure its quality."

Then we move on to Wikipedia as an example, which has recently been blasted by others (notably Nicholas Carr) for the quality of the entries, and has moved toward much tighter control over its content and who may alter or add to it:

"This lesson was brought home to Wikipedia last December, after a former American newspaper editor lambasted it for an entry about himself that had been written by a prankster. His denunciations spoke for many, who question how something built by the wisdom of crowds can become anything other than mob rule."

The piece continues on about quality, intellectual property issues, and makes some very astute observations about the real motivation and outcomes of open source software projects:

"One reason why open source is proving so successful is because its processes are not as quirky as they may first seem. In order to succeed, open-source projects have adopted management practices similar to those of the companies they vie to outdo. The contributors are typically motivated less by altruism than by self-interest. And far from being a wide-open community, projects often contain at their heart a small close-knit group.

With software, for instance, the code is written chiefly not by volunteers, but by employees sponsored for their efforts by companies that think they will in some way benefit from the project. Additionally, while the output is free, many companies are finding ways to make tidy sums from it. In other words, open source is starting to look much less like a curiosity of digital culture and more like an enterprise, with its own risks and rewards."

An example given directly afterward is MySQL, which freely gives out its source code, but the development is completely done by paid programming staff financed by the approximately 8000 'customers' that pay MySQL directly for support and maintenance. That looks a lot more like a business to me, and much less of a 'community.'

The article continues in-depth about open-source's strengths and weaknesses, but what's apparent to me is that a meshing of open source concepts and traditional corporate governance and control is taking place. It is beginning to appear that it's not a choice of one or the other; it will wind up being a combination that, hopefully, gives us the best of both. The hue and cry of 'community' is being drowned out by the hybridism with corporate interests that has emerged.

I think the same thing will happen to the various elements of Web 2.0 as well.

open source

Web 2.0

enterprise architecture

Lousy Businesses and Technology

Quite a few of us work for or consult to such 'lousy' businesses. Oftentimes we (as IT) evangelize that the path away from lousy (short of selling out or closing down) is the implementation of new technology schemes. The mistake often made in these cases is assuming that implementation and use of the technology itself will right the organization and lift it from lousy to good, or even great.

The flaw in this thinking is that these initiatives often fail to provide the business with any competitive advantage in its markets or against its competitors. If IT is going to enable a shift away from lousy, any technology investment must:

- lower the cost of doing business in a manner that cannot be easily duplicated by competitors. If every market player gets the same, nearly-immediate advantage from a technology implementation, what's the point in doing it and spending the money?

- provide the basis for disruptive products and services that lift the business over its market and competition.

- furnish the data and capabilities that drive the insights of marketing and sales functions to exploit market and competitive inefficiencies to the organization's advantage over other players.

And depending on the circumstances of your business and the marketplaces you serve, apply the above criteria liberally before issuing any purchase orders.

Thursday, March 09, 2006

Relationship-Building for Architects

- The CIO

- The CTO

- Your peer group of architects and senior IT management/technologists

- The project managers and PMO if you have one

- The development team leads and senior developers

- The business unit heads and sponsors you routinely deal with

- Members of non-IT business units you routinely deal professionally with

There are 2 direct ways to look at your business relationship with these stakeholders: what you get from them, and what you give to them. Here's an example of this with some of the constituencies listed above:

CIO - gives: Funding, Formal Approval, Strategic Direction; gets: Architecture

CTO - gives: Technology Strategic Direction/Standards, Thought Leadership; gets: Technical and Strategy Input/Advice

Project Managers - gives: Project-based Strategy, Leadership, Tracking/Status; gets: Buildable Architecture, Subject Matter Expertise

Development Team Leads - gives: Technical Leadership, Development Strategies, Development; gets: Functional Architecture Specifications, Interface/Data Specifications, Exchange Between Architecture and Development Standards & Issues

Business Sponsors/Unit Executives - gives: Funding, Approval, Requirements, Process Direction; gets: Working Software, Understandable Mapping of Technology Environment to Business Requirements and Processes

A couple of things to note before I continue - these are examples of stakeholder give & get relationships, and if you attempt this exercise, the players' roles and titles will most likely be different in name or function. Also, I really wanted to put this in a table to make it clearer, but I didn't want to embed a Word file in this post...if anybody has a good way to do this without a file and without changing blog sites, I'm all ears and grateful...just leave a comment on this post or e-mail me, with my thanks in advance.

OK, back to our program. This exercise can also be enhanced by adding other relevant data or intuitions, such as 'perception of the IT organization,' 'perception of the EA function,' and others, along with a low-to-high estimate for each. Once you have completed this, you can determine where to focus your energies with respect to building and maintaining/improving relationships and trust with the business and IT leadership and development.
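For anyone who prefers to keep this review in a script rather than a document, here is a minimal sketch of the exercise in Python. The give/get entries are taken from the examples above; the `Stakeholder` class, the `ea_perception` field, the 1-to-5 scale, and the scores themselves are my own illustrative assumptions, not measurements of any real organization.

```python
from dataclasses import dataclass


@dataclass
class Stakeholder:
    name: str
    gives: list[str]
    gets: list[str]
    ea_perception: int  # hypothetical estimate: 1 = low, 5 = high


# Give/get entries come from the examples in this post; the scores are made up.
stakeholders = [
    Stakeholder("CIO",
                ["Funding", "Formal Approval", "Strategic Direction"],
                ["Architecture"],
                4),
    Stakeholder("Project Managers",
                ["Project-based Strategy", "Leadership", "Tracking/Status"],
                ["Buildable Architecture", "Subject Matter Expertise"],
                2),
    Stakeholder("Development Team Leads",
                ["Technical Leadership", "Development Strategies", "Development"],
                ["Functional Architecture Specifications", "Interface/Data Specifications"],
                3),
]


def focus_order(items: list[Stakeholder]) -> list[Stakeholder]:
    """Return stakeholders with the lowest perception score first."""
    return sorted(items, key=lambda s: s.ea_perception)


for s in focus_order(stakeholders):
    print(f"{s.ea_perception}/5  {s.name}: gives {len(s.gives)} things, gets {len(s.gets)}")
```

The lowest-scoring relationships sort to the top, which is one simple way to decide where to spend relationship-building time first. Any other estimate you track (perception of the IT organization, for instance) could be added as another field and sorted the same way.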

I recommend doing a simple review like this at least once per year, and more often if there are significant areas for improvement. If you have, or can achieve, a relationship level with the stakeholders in which you are (or become) the trusted advisor or steward, it's going to make a number of aspects of your job as enterprise architect easier, and you can better achieve consensus or coalition-building to support initiatives you seek to develop and execute.

While this scheme may seem a bit simplistic, that's by design. You want simple truths like this so you can take appropriate action without much hand-wringing (and time-wasting) over what to measure, how to do it, and how often. We have more than enough complexity to deal with as it is, and getting a read on where we stand with stakeholders in a manner such as this provides quick benefits to the business, the IT organization, and ourselves.

Finally, this is not to be construed as a performance review; it's more about how the EA function (and by extension, you and your peer architects) is viewed by various stakeholders in the organization.

enterprise architecture

The Enterprise Architecture Definition Collection

Good Enterprise Architecture:

- Improves internal communications by providing a common language for describing how technology can support business initiatives.

- Helps companies link business and IT priorities by creating road maps for decisionmaking about technology initiatives.

- Helps reduce costs by encouraging technology standards throughout the organization, thus allowing IT to pinpoint trade-offs in project costs based on adherence to architectural requirements.

- Improves the quality of technology initiatives for business by easily explaining plans to a broad range of constituents.

Poor Enterprise Architecture:

- Enforces the use of technical terms and jargon that confuse both business and IT.

- Creates such a high level of detail for defining technology initiatives that decisionmaking is paralyzed.

- Requires a level of standardization that can potentially limit business-unit flexibility and speed to market.

- Has unrealistic goals for transition to new corporate technologies.

CIO Insight magazine (website), "Enterprise Architecture Fact Sheet"

Enterprise Architecture is an infrastructure and a set of Machines constructed in order to manage a chaotic, dynamic, unpredictable, complex, organic, prone to error, frustrating, Enterprise IT, which has to support an ever increasing, dynamic portfolio of products and services, through constant "ASAP, Now, Right-Away" modifications of business processes.

Muli Koppel, Muli Koppel's Blog, published February 22, 2006

Enterprise architecture (EA) refers to the manner in which the operations, systems, and technology components of a business are organized and integrated. It defines many of the standards and structures of these components and is a critical aspect of allowing capabilities and their supporting applications to develop independently while all work together as part of an end-to-end solution. An EA consists of several component architectures which often go by different names. Some of the common ones are: business/functional architecture; data/information architecture; applications/systems architecture; infrastructure/technology architecture; operations and execution architecture.

John Schmidt, David Lyle, Integration Competency Center: An Implementation Methodology, 2005, Informatica Corporation. Posted January 29, 2006.

Enterprise Architecture

Wednesday, March 08, 2006

Monetizing Web 2.0?

Two things jumped out at me immediately from their disclosures: a) they appear to generate a ton of traffic to and through their site; and b) they generate very little revenue. Gross revenue over the 17 months reported was $74,194.50 USD, with a monthly average of $4364.38. Their best revenue month was December 2004, with $5872.50 USD.
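For the record, the arithmetic behind those figures is easy to check. This is a small sketch in Python, reading the gross figure as $74,194.50; the variable names and the annualized figure are mine, added only for illustration, and nothing here comes from the site's own disclosures beyond the three numbers quoted above.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

gross_revenue = Decimal("74194.50")   # 17-month gross, as quoted above
months_reported = 17
best_month = Decimal("5872.50")       # December 2004, as quoted above

# Average monthly revenue, rounded to the cent.
monthly_average = (gross_revenue / months_reported).quantize(CENT, rounding=ROUND_HALF_UP)

# Illustrative only: what a full year looks like at the average rate.
annualized = (monthly_average * 12).quantize(CENT, rounding=ROUND_HALF_UP)

print(f"Monthly average: ${monthly_average}")
print(f"Annualized at that rate: ${annualized}")
print(f"Best month exceeds the average by: ${best_month - monthly_average}")
```

The monthly average matches the $4364.38 quoted above, and even the best month is only about a third higher than that average.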

I'm not a financial analyst, and I'm not going to second-guess what the founders should and should not have done with respect to the site and its services or content, but a gross revenue stream like this doesn't sustain a business very long, not to mention that their gross revenues have been trending downward since June 2005. All of this from a site that claims 50,000 members, signs up 100-150 members per day, has 1 million listings on Google, and holds an Alexa ranking of 4174?

What's sticking in my craw right now is this: despite the impressive site and traffic/hit numbers, the revenue issue more than suggests that there isn't a sustainable business here, and if there is, what am I missing? What could the founders (or a buyer) do differently to drive up revenues?

And that's a question that needs answers in lots of cases like this, because it won't be the last...

Web 2.0

Tuesday, March 07, 2006

Thoughts on Process and Expectations

The overall problems appear to me as attempts to strike a balance between results, process, and the expectations of users & the business. This balance is so tough to strike that few will truly succeed 100% in satisfying all stakeholders, particularly on large projects.

Here are a few examples of perceptions/statements/mantras we all have been exposed to whether on-the-job or in publications and blogs:

- The systems and applications IT develops cost too much and take too long to implement.

- It costs too much to maintain operational IT systems (the 'keeping the lights on' part of the budget that is 70-80% of most IT expense).

- It costs too much because of, as Scott aptly states, "waste...being ceremonial or overhead activities that do not directly add value."

- We can cut IT costs with an enterprise architecture (and indirectly, a SOA) that unifies and integrates applications and infrastructure.

- We develop one-offs and small-project apps that the business requires quickly but are difficult to maintain and support, don't interoperate with anything else we have in production or on the whiteboard, and have some development activities that are repetitive (particularly data and database-related) as we need to re-model or re-engineer the data access and movement/integration because of 'unique' business requirements.

- We can implement and deploy faster if we continue using [fill in older technology here] rather than web services (and indirectly, a SOA), because that is what we know how to do without additional training or outside help.

- We can service-enable anything faster and cheaper using REST rather than WS-* because the latter is cumbersome, too complex, and more difficult to implement.

What further muddies the water here is SOA itself. We're finding out that comprehensive SOAs are not built quickly and cheaply, and definitely not quick or inexpensive enough to satisfy urgent business needs. Well-built SOAs will pay back much more than they cost - eventually, and not fast enough to satisfy some parts of the business.

Finally, we get to the question of "value" of any process, activity, or sets of these. The question behind this is 'value to whom?' Business agility is one thing, IT agility is another, and overall costs to develop, deploy, and support are yet another. What kind of balance are we looking for here, and can we achieve some sort of consensus on this with the stakeholders?

I realize I'm asking a few more questions in this post than I'm answering, and much more thought and dialog is required. Scott and Cote' have begun a very interesting topic...much more to follow.

enterprise architecture

Sunday, March 05, 2006

Various and Sundry Musings - 3/5/2006

Nick Carr thanks the Academy as he receives the award for "Best Performance in a Curmudgeonly Role" for his sublime performance describing and commenting on the Web 2.0/Mashup-related "Camp Wars." Like Nick, I find it very difficult to accept that certain individuals in the blogosphere wax rhapsodic about "community" with respect to mashups and Web 2.0 out of one side of their mouths and blast "competing" camps out of the other. Sad.

If you haven't seen the film "Walk the Line" about the late country music stars Johnny and June Carter Cash, by all means rent or buy it on DVD. Even if you aren't a fan of country music, the story is superb and Johnny and June are wonderfully portrayed by Joaquin Phoenix and Reese Witherspoon. A keeper.

Parts III and IV of my discussion about SOA and legacy systems will be out later this week. I apologize for the delay.

The ongoing IT parody on the Fox TV show "24" (which Muli Koppel recently used to explain architectural points) continues to amuse me. I love the show and have watched it since the beginning 5 years ago, but the ability of the CTU organization to refactor systems and data, and alternatively to erase or lose it as the plot dictates, makes me laugh hard. Good thing it's Hollywood and not truth...maybe...:)

Saturday, March 04, 2006

The Keys to Good Enterprise Architecture

As I mentioned previously, an enterprise architect's primary function is to look at both the business and IT (development in particular) in the abstract, a level or two or three away from the 'action' to ensure the following:

- The organization gets its process needs objectively met through information technology

- The resulting technical solutions are efficient, reliable, secure, cost-effective, and agile

- Specific segments of the business-technology interface in a given organization, while using commonly available technologies, represent a core competency or value-add unique to the organization that provides competitive and profitable differentiation from rivals.

The hue and cry of certain parts of the business (and other IT pundits at times) is that all enterprise architecture produces is nifty-looking diagrams in geek-speak that don't deliver much value to the business. On the other hand, development teams resent EA being an 'ivory-tower' operation dictating standards and guidelines from on-high with no appreciation of development issues and goals.

Each camp wants EA to focus on its own issues, usually exclusively. Both camps miss the point, because EA, properly practiced, balances abstraction of and between both sides.

Why are we the middleman in all this, and why can't we (as an organization) remove an expensive cost-center from the mix and use the money not spent on EA for something else?

The answer is the same for both camps: it's too easy to get bogged down in details on either side and partially or completely miss various opportunities to improve business processes or systems under development (or the aggregation of them) because our noses are at the grindstones of business/systems analysis, development, or project management exclusively. It skews perspectives so much that neither business processes nor systems and infrastructure can be optimized, because we're too close to the root issues of the matter to see what can and will go awry.

That's partially what thinking in abstract terms is about. Take SOA as an example - are we thinking about what types of loosely-coupled services we are going to offer the business, or are we thinking about how precisely they will be implemented down to the actual code? Actually, we are thinking about both, but at a level or two above what the groups responsible for more-specific definition and execution are thinking. This is a check-and-balance activity that yields great benefits to the business in terms of agility and response to business, cost/time-to-market, and technical issues.

Finally (for now) there is the issue of producing artifacts for these abstractions. As with the balance of abstraction necessary between technology and business, enterprise architects must communicate the architecture(s), rationale, and benefits to a broad audience in terms that the specific audience understands. Too many architects rely on a single artifact (or tightly-coupled set of them) to address multiple constituencies. The usual result is that the works are too dry and technical for the business, and not detailed or nuanced enough for IT.

Before I get to a guideline list of artifacts useful for various constituencies that architects routinely encounter, allow me to point out that documentation should be, as Agilists put it, 'good enough' to communicate what needs to be said, and nothing further. Striving for perfection in documents costs too much time for the little additional value added, and if you produce an artifact that nobody reads (or will read) except you, I would revisit the decision to produce that work.

Here's a sample of artifacts from various abstractions that could be produced:

- The Business: Non-technical overview of the architecture with particular emphasis on business initiative/process support, agility to meet changing demands, and efficiency both in time-to-market and costs.

- Developers and Other Technical IT Folks: Technically oriented functional overview of the architecture with the understanding that you are describing "working softwares" with emphasis on the plural rather than singular. Individual project architectures and designs are part of the development team activities with EA support and input. Take great care to describe how all of this - applications and infrastructure - works together. Discussion of any singular project or system generally produces 'silo' thinking and discussion, which should be avoided.

- Senior IT Management and Project Management/PMO: A combination of the first two items above as necessary, plus preliminary estimates of cost, time-to-market (design, develop, test, deploy), and resources necessary to execute.

enterprise architecture

Thursday, March 02, 2006

Why IT Can't and Won't Fix American Healthcare

Uh huh. Next hype-ridden statement, please...

A lot of trees, ink, and electrons get expended in the US telling us as a profession that proper application of IT here, there, globally, best-practiced, ad nauseam will 'fix' the American healthcare system.

The current system isn't fixable, by IT or anyone/anything else. Robert Samuelson's commentary in the Washington Post a few weeks back makes a vital point about why this won't happen (at least under present conditions):

Americans generally want their health-care system to do three things: (1) provide needed care to all people, regardless of income; (2) maintain our freedom to pick doctors and their freedom to recommend the best care for us; and (3) control costs. The trouble is that these laudable goals aren't compatible.

We can have any two of them, but not all three.

What Samuelson describes here we would call a mismatch of requirements - the goals that 'the business' wants in this case, while laudable, aren't compatible with each other, or in context to the problem being solved or the process under development. When we design IT systems to respond to situations like this, the systems become as flawed as the processes and decision-making being supported.

We have, as Samuelson puts it, quite a conundrum: IT can certainly be used (and to an extent, has been) in a number of contexts to reduce or control costs. But that won't matter much if the other two factors are still present because reducing process-related expense won't counteract the explosion in demand for all services paid for, of course, by other entities like the government or insurance companies.

I can't tell you how many times I look at the admin areas of my doctor's and dentist's offices and see shelf-after-shelf of color-coded file folders and wonder what the cost-basis and savings would be if all of that paper were digitized. It probably could and should be, but in the end, given the above three constraints, will it matter in terms of improving care and the access of all to it?

Until we get our house in order with respect to expectations, if we ever do, there are no silver bullets to 'fix' our healthcare problems, including IT. And claims in our trade and professional magazines to the contrary are the most dangerous forms of hype and illusion that I've come across.

Healthcare IT

Wednesday, March 01, 2006

Dot-Bomb 2.0

Once again folks, we have the same-old same-old, circa 1998. Lots of VC cash swimming around trying to find a home and winding up in some edgy tag-mashups that will attract 500 competitors within days if they have any success at all.

I guess some people don't learn that, as Nick put it, 'Edgeio enters a crowded market with a ton of pizzazz and a gram of strategy. Sound familiar? It should. It's what's engraved on the virtual gravestones of hundreds of dot-coms.'

There's money to be made in Web 2.0-land, but this isn't it. As I stated previously, we're in a sea-change with all of this, but it appears that we gotta let these Webvan- and Pets.com-redux sites have their 15 minutes in the limelight before they take their inevitable dirt nap. Maybe it will come to a head quicker and less painfully this time around...

Nahh....

Web 2.0

Sunday, February 26, 2006

The Future of Lots of Things

Enterprise Architecture is an infrastructure and a set of Machines constructed in order to manage a chaotic, dynamic, unpredictable, complex, organic, prone to error, frustrating, Enterprise IT, which has to support an ever increasing, dynamic portfolio of products and services, through constant "ASAP, Now, Right-Away" modifications of business processes.

That is a formulation of my practical experience, as described in The Rise of the Machines: A new Approach to Enterprise Architecture. The outcome of an Enterprise Architecture is a Management Framework upon which, or rather, inside which, the Enterprise lives; physically.

Yet, if you consider the usual approach to Enterprise Architecture, you'd normally find committees, standards, governance, and smart people being in the confirmation pipe of business and technological initiatives.

Under this paradigm, the success of an EA Office is measured by the popularity of its staff members and of its guidelines and procedures.

I have always thought of EA in aggregate as the mapping of business processes and tactics to an optimal set of technology solutions that enable the business to carry out its initiatives. The value produced by EA 'done right' could be summarized as:

- The business gets its process needs objectively met through information technology

- The resulting technical solutions are efficient, reliable, cost-effective, and agile

- Specific segments of the business-technology interface in a given organization, while using commonly available technologies, represent a core competency or value-add unique to the organization that provides competitive and profitable differentiation from rivals.

Now we have SOA, mashups, and SaaS on the near horizon as requisite environments, or at a minimum as a major portion of the technology environment in the next few years. I dismiss out-of-hand the idea that any or all of these will replace EA, as none of them fully embraces the business-technology relationship in total. However, there is something lurking on the horizon related to these technologies that will become evident in the next few years, and the best term I can give to it now is the rise of what I will call 'Meta-Architectures.'

What's just over the horizon for all of us is the ability of users to precisely and individually define their applications' look, feel, and results simply by clicking or dragging and dropping combinations of SaaS offerings or mashups in any manner that makes sense to them and running the result on their devices, just like they do now with files and text/data objects in a desktop environment. It will not take the abilities or time of the IT organization to change applications or data to meet this need. The users will just point to the services they want, defined in terms they understand, and combine them as they see fit; the interoperability between what they select is a given as part of the services offered.
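To make the idea a little more concrete, here is a toy sketch in Python of what "point to services and combine them" might look like underneath the drag-and-drop. Everything in it is hypothetical: the `catalog`, the `publish` and `combine` helpers, and the two sample services are invented for illustration and do not describe any real product or platform. The one design assumption that matters is that every service accepts and returns the same shape of data (a plain dictionary here), which is what makes interoperability "a given."

```python
from typing import Callable

# Assumption: every published service takes a dict and returns a dict.
Service = Callable[[dict], dict]

# Hypothetical catalog that IT maintains; users only ever see the names.
catalog: dict[str, Service] = {}


def publish(name: str) -> Callable[[Service], Service]:
    """Register a service under a business-friendly name (IT's job)."""
    def register(fn: Service) -> Service:
        catalog[name] = fn
        return fn
    return register


@publish("open orders")
def open_orders(data: dict) -> dict:
    # Keep only the orders that are still open.
    return {**data, "orders": [o for o in data["orders"] if o["status"] == "open"]}


@publish("order total")
def order_total(data: dict) -> dict:
    # Add a total of the order amounts currently in the data.
    return {**data, "total": sum(o["amount"] for o in data["orders"])}


def combine(*names: str) -> Service:
    """Chain catalog services by name, in the order given (the user's job)."""
    steps = [catalog[n] for n in names]

    def run(data: dict) -> dict:
        for step in steps:
            data = step(data)
        return data

    return run


# A user assembles a view without writing requirements or waiting on IT.
my_view = combine("open orders", "order total")
result = my_view({"orders": [
    {"status": "open", "amount": 120},
    {"status": "closed", "amount": 80},
    {"status": "open", "amount": 30},
]})
print(result["total"])
```

In this sketch IT owns the catalog and the quality of each service, while the user owns the combination; swapping the order of the names or adding a third service requires no change to the services themselves.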

Moreover, given the current state of technologies such as .NET and the C# language, it is certainly possible that services, to a limited extent now but more so going forward, will be able to significantly refactor themselves logically per user directives and execute. As with the previous example, it will not take the time or talent of the IT organization to respond to changes like this - the capabilities will come with the systems and be user-initiated without specific IT intervention or permission.

While we've heard a lot of this in the past in different contexts - things like COBOL and SQL being invented so users could easily write software or query databases, and artificial intelligence able to mimic and potentially replace human expertise and decision-making - I think that the new paradigms have some real 'legs' in what I've described above, and I'm usually a noted skeptic about such things. I may be dead-wrong on this forecast, but I feel that these paradigms and technologies, properly deployed and managed, have the greatest potential over anything I've come across in my career.

Which leads to Muli's further discussion about EA evolving into this:

I believe these thoughts will finally change the perception of both EA and SOA: EA will shake off its image as a "documentation, procedures and guidelines" body, repositioning itself as a practical, implementation-oriented discipline aimed at the creation of an Enterprise Management Infrastructure, while SOA will be repositioned, no longer as an Integration/Interoperability architecture, but rather as an Enterprise Management architecture.

Corresponding to what I have described above, the type of EA shift Muli describes is precisely what EA must evolve into if it is to survive and add value to our organizations. One feature of the meta-architectures I described above is that they are controlled by the users, and not by IT. IT loses control of the combinations that make up the meta-architectures once the underlying services are deployed because users build and execute off service combinations without the need for detailed requirements, extensive documentation, and development. IT will not have the resources or the expertise to 'police' user service combinations, merely the ability to monitor and track usage and respond to and solve fault conditions. IT manages the service environment and ensures the quality and security of services, creates new environments and services, and becomes much more process-oriented than in the past. It is the combinations (or in other words integration and interoperability) that it cedes to the business, thereby giving the organization the agility and flexibility so long sought after.

It is a fascinating and important time to be in this business, folks, and these concepts are still being fleshed out by others and myself. More to come on this soon.

enterprise architecture

Web 2.0

SOA

Saturday, February 25, 2006

My Take on Resumes and Hiring

I'm going to take a different tack...if you're looking for a new position, or in certain extreme cases, paying work period, and all you have going is your resume on Dice, Monster, ComputerJobs, and the other sites, you're only going halfway, at best.

Why? Because I'm convinced that 99% of the great jobs out there aren't on these services or in the help wanted ads. They're only available from people you already know or need to meet. Yeah, that's right, you're going to need to get off your butt and start networking. Professional association meetings and conferences are great avenues for this. If you've kept in touch with folks from previous jobs and gigs, let them know you're looking (discreetly if you must, but let them know). Other professionals that you know socially are good too, as they may know someone else more aligned to what you're looking for and will make an introduction. It takes time and effort, but the payoffs can be huge compared to the job listings you and everybody else sees on the internet.

Scott and JT made lots of great points about resume mechanics and interviewing which I won't repeat here. I'm going to focus more on content of that document and interviewing:

- A recruiter who placed me some years back that I remain friendly with remarked to me last month that 'everybody is an architect' these days: Data Architect (not modeler); Application Architect (not developer); System Architect (not development team lead); Network Architect (not network engineer). That, in my mind, is stretching things a bit. If I see 'Architect' on your resume I can guarantee that I will be asking you a lot of architecture-related questions and if the answers I receive lead me to believe that you haven't interfaced much with the business, negotiated requirements with users, or performed higher-level strategic and tactical design that functionally specifies things for developers and the data people, well, thanks, we'll get back to you...

- I'm technology- and methodology-agnostic, and most good-to-great architects are also. I like to have a large toolkit at my disposal and select what fits well in any particular situation. If you start shouting fire-and-brimstone about any specific technology, 'best practices' (ugh), open source, or how Agile will save the world as we know it, I will politely decline the Kool-Aid that you're serving and move on to the next candidate. Resumes and interviews are not the time to get all evangelical. I'm more interested in learning how you think, how you handle setbacks, and how you get work done efficiently.

- I don't care (and neither does anyone else) what you did 10 years ago, or 15 or 20 for that matter. You wouldn't believe the chronological resumes I've seen with experience listed all the way back to high school in the early 1970s. Stick to the last 5-8 years. If anything before that is possibly relevant, mention it last in a catch-all paragraph.

- The same thing goes for your technical skills. The fact that you did batch COBOL programming only dates you as an older worker, and doesn't give you an additional arrow in your quiver to impress me that you can perform the work that you're interviewing for.

- I see too many resumes where the experience description for the positions held list the projects and what the project tried to accomplish. I'm not much interested in the project you worked on. However, I'm very interested in what you did on those projects, not your team, your boss, or your group/organization.

- I will ask you in an interview to describe a project you worked on or effort you made that failed - partially, completely, and miserably are all OK for response fodder. If you've worked for a substantial length of time in IT, you've been part of at least one of these turkeys. I will ask you what you did to correct the situation (even if it didn't work), what you learned from the experience, and how it influences your work and thoughts now. If you've got more than, say, 4-5 years of professional experience and you can't (or won't) describe an event like this, I'll deduce that either you're not being straight-up with me or you haven't done what your resume says you did somewhere. Your prognosis for the job I'm interviewing you for becomes fatal in that case.

- If you speak and/or write on professional topics (or have in the past), by all means, list it. Same goes for blogging if the blog is relevant, although I doubt that Mini-Microsoft will be listing his on a resume anytime soon...:) Speaking of Mini, anybody notice lately that his/her blog has basically degenerated into a bitch-fest for MS employees? Mini throws out the raw meat for a few sentences or paragraphs, and the piling on in the comments commences immediately. I read the comments more than his stuff now...lol

- Beyond a BS or BA degree, your academic credentials are primarily important to HR, and perhaps senior management. Assuming that you have relevant paid professional experience, that matters more than your post-graduate education.

- Although you may be working (or have worked in the past) in a complete hellhole, don't ever, ever, ever trash your current or former employer(s) in your resume or in interviews. You will immediately get deep-sixed without remorse or guilt on the part of the hiring authority. The time to reveal these 'learning experiences' is after you're hired and after you get the lay of the land in the new environment.

- You've had 4 jobs in 4 years, and, as JT notes, you're not a consultant? You have a big problem. I don't know how you go about fixing it other than: a) become a consultant or contractor; or b) get in somewhere, somehow and stay put. And by all means, fix the problem that caused this situation, because it won't go away unless you do something to address it.

- It is very, very easy and inexpensive to run background checks on anyone these days. As such, bald-face lies about employment, academic credentials, any criminal history, etc. will be outed in short order. Don't do it.

- Treat your professional references like family. I'm very protective of mine. If you apply for a lot of contract positions, the time to reveal your references is not when the recruiter calls asking for your resume. It's when you've had an interview with the client, and then provide them directly to the client, not to the body shop. What happens if you reveal your references to every recruiter that contacts you is that they call your references, and it's not to talk about you. Rather, it's to drum up more business for them. That can get highly annoying to your references if you're on a substantial job search and they're getting 5-10 calls a day from recruiters dropping your name and asking for business. In fact, they usually become your ex-references shortly after such an experience. And don't fall for the crap recruiters give you that they can't present your resume without the references. They certainly can, and will if you're qualified, but will lie to you just to see if you'll capitulate. Legit recruiters never do this, and terminate conversations with those that do.

- Same thing goes for your Social Security number and other personal information. The time to reveal all of that is when you get a serious, legit offer in writing. Not before.

- Finally, what counts the most in a job search is getting in front of the people in direct position to hire you. That isn't HR, and never was. If you can get an 'in' within some organization (back to the networking again), you will be miles ahead of any competitors for the position. HR only can hire HR people, but some $12-per-hour HR clerk with no clue about the work we do can screen you out so that the real hiring authority never sees your credentials. That's why networking and getting to know as many good professional folks as well as you can is so valuable.

Friday, February 24, 2006

The Walls of Sanity

The Wall of Hype: This seems to have calmed down a bit but it also might just be moving around. Web 2.0 hype does seem to have diminished in the face of some withering anti-hype and the hype cycle has moved more to Web 2.0-related developments like mashups and the latest round of Web 2.0 startups. Nevertheless, Web 2.0 promotion continues unabated in certain circles along with the anti-hype and if you're not following closely, you don't know what to believe:. Whether Web 2.0 is the next generation of the Web, or if it's snake oil. If it's the future of software, or just a marketing gimmick. I will give you my point of view one last time; Web 2.0 is real. And for that good reason, and some not so good ones, there is a lot of hype surrounding it.

Anything, technology-related or otherwise, is going to grab attention if it's talked about and promoted enough. This is no exception, and there are players who recognize an opportunity (or two or three) here. The hype will eventually sort itself out sooner rather than later, and like the dot-com bust, it will be 'put up or shut up' time in short order. If the whole thing doesn't survive, parts of it most certainly will, and those parts remain open to speculation at this point.

The Wall of Complexity: If you look at the Wired post above it has a particularly complex diagram in it. I actually drew that in order to create a pretty compehensive view of most of the moving parts in Web 2.0. There are a lot and it's hard to figure out where to start as a user, much less a software designer. The good news is that the good exemplars (Flickr and del.icio.us) and some of our approaches (like Ajax), actualy (sic) make it pretty obvious what you're supposed to do. But it's still very hard and what still not conveyed very well is the sense of balance and proportion required. In other words, you're not supposed to pile every single one of these Web 2.0 ingredients into the cake, bake it, and sell it to the nearest Web software giant. It doesn't work that way. There is a constant feedback loop with your users on the Web that guide you to in a close collaboration to add/remove features and capabilitgies (sic) while dynamically shaping and reshaping the product into what it needs to be at any given time.

Here is precisely where trouble lurks. Agility is a great and necessary capability and we must all move toward, if not embrace it. However, the enemies of agility are complexity and instability, both of which take many forms, and both of which can and have been successfully addressed in the past. As I have remarked previously, feedback loops that consist mostly of letting users test 'production' code hastily rushed onto sites and APIs will eventually (and probably quickly) lead to disaster.

Call me 'old school,' but I expect applications and websites that I work with to pretty much operate and look the same day-in and day-out. New features? Sure, but if you're radically changing my 'experience' every half-hour while I'm trying to get something important accomplished, I'm going to be a bit miffed if what you've pushed to your site or API interferes with or prevents what I'm trying to do or see or worse, causes all or parts of your site/API to fail. And if that continues, it will eventually drive me to one of your many competitors that will provide the level and consistency of service that I'm expecting (and, most likely, paying for).

Consider this alternative scenario: That's not counting what our developer buddy down the hallway is also pushing to our site, most likely without our knowledge. Since 'regression testing' doesn't seem to appear in the Web 2.0 lexicon (heck, 'testing' appears minimally), it's a good bet that once we mash his changes with ours, our online stuff goes catatonic or takes a dirt nap until it occurs to either or both of us that the fast changes stepped on each other and/or the rest of our systems. Better go find last night's backup and pray we didn't lose another user to our competitors, and believe me, if we offer anything of significance to the community-at-large, there will be many to choose from...

The Wall of Significance. Is Web 2.0 a major new revolution in the way software is created and used? Probably. But there's a lot of stuff to learn, especially about the softer aspects of online systems like collaboration and social software. A lot of software developers, architects, and designers, more comfortable with the precise, exact parts that comprise software, are often pretty unhappy about this. Unfortunately for them, these aspects are probably here to stay, but they aren't sure. The competition for users, attention, and marketshare means you have to increasingly dangle the most effective engagement mechanisms or people will go elsewhere. And because we're human, there are few more powerful draws that building a sense of ownership and community. But in these early days, it's hard to tell if there really is a fundamental shift in first order software design, or just a passing wave of faddish affectation. Those of you who read this blog know where I stand, but it's hard for everyone to appreciate the significance of all this yet.

Well Dion, what hasn't changed a bit is that at a fundamental level, computers and networks still operate the same way they did in the past with respect to amplifying the imprecisions and mistakes humans routinely make in development, testing, and control of release to production. Ajax and web services can't help you there, my friend, and won't. What does help is a disciplined approach to all of these issues. While we have the capability to make changes on-the-fly (and looking back, always have although exercising it has been a choice dependent on circumstances), optimizing development and testing processes to shorten time-to-production and reach the ownership/community goals you seek are effective only in the context of providing consistent user experience, quality and security.

I agree that it's too early to tell whether or not we're in a total paradigm shift or a fad. I suspect at this point that it's a bit of both, and there is much more to observe and learn.

Web 2.0

Wednesday, February 15, 2006

Just Because It's Possible, Doesn't Mean It's Good

Marc wrote about software revisions as follows:

"Gone are the days of 1.0, 1.1, and 1.3.17b6. They have been replaced by the '20060210-1808:32 push'. For nearly all of these companies, a version number above 1.0 just isn't meaningful any more. If you are making revisions to your site and pushing them live, then doing it again a half hour later, what does a version number really mean? At several companies I've met, the developers were unsure how they would recreate the state of the application as it was a week ago -- and they were unsure why that even matters."

I'll echo a comment made directly to this post that the developers in question have never heard about change management controls and versioning. There are plenty of tools to accomplish revision control out there that work very well, and here's a real good reason why it's necessary: if somebody's 1/2-hour revision blows up and brings about an outage or severe loss of functionality for any reason, going back to the last-known-good revision in live production, and backing out the change is the first order of business in getting the system back on-line.
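To make the 'last-known-good' idea concrete, here is a minimal sketch of the bookkeeping involved. It is not how any particular revision control tool works; the `ReleaseLog` class, its method names, and the timestamp-style revision ids are all made up for illustration. The only point is that if you record what went live and which revisions were verified, backing out a bad push becomes a lookup instead of a guess.

```python
class ReleaseLog:
    """Hypothetical record of what was pushed live, in order."""

    def __init__(self):
        self._revisions = []   # revision ids in the order they went live
        self._good = set()     # revisions later confirmed to work in production

    def deploy(self, revision_id: str) -> None:
        """Record that a revision was pushed to production."""
        self._revisions.append(revision_id)

    def mark_good(self, revision_id: str) -> None:
        """Flag a deployed revision as verified (e.g. it passed regression tests)."""
        if revision_id not in self._revisions:
            raise ValueError(f"unknown revision: {revision_id}")
        self._good.add(revision_id)

    def last_known_good(self):
        """Return the most recent verified revision, or None if there is none."""
        for revision_id in reversed(self._revisions):
            if revision_id in self._good:
                return revision_id
        return None


# Illustrative ids only, in the timestamp style Marc describes.
log = ReleaseLog()
log.deploy("20060210-1738")
log.mark_good("20060210-1738")
log.deploy("20060210-1808")          # the half-hour push that blows up
rollback_target = log.last_known_good()
print(rollback_target)
```

Without something like this log, the developers in Marc's post have no answer to 'what do we roll back to?' when the newest push fails.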

I also wonder how these folks regression test their systems before turning them live, and even with an automated test suite I doubt that any system of substance can be revised and completely tested in a half-hour.

That's assuming that these folks know what regression testing is in the first place, and why it matters.

Moving on to QA and testing:

"Developers -- and users -- do the quality assurance: More and more startups seem to be explicitly opting out of formalized quality assurance (QA) practices and departments. Rather than developers getting a bundle of features to a completed and integrated point, and handing them off to another group professionally adept at breaking those features, each developer is assigned to maintain their own features and respond to bug reports from users or other developers or employees. More than half of the companies I'm thinking of were perfectly fine with nearly all of the bug reports coming from customers. "If a customer doesn't see a problem, who am I to say the problem needs to be fixed?" one developer asked me. I responded, what if you see a problem that will lead to data corruption down the line? "Sure," he said, "but that doesn't happen. Either we get the report from a customer that data was lost, and we go get it off of a backup, or we don't worry about it." Some of these companies very likely are avoiding QA as a budget restraint measure -- they may turn to formal QA as they get larger. Others, though, are assertively and philosophically opposed. If the developer has a safety net of QA, one manager said, they'll be less cautious. Tell them that net is gone, he said, and you'll focus their energies on doing the right thing from the start. Others have opted away from QA and towards very aggressive and automated unit testing -- a sort of extreme-squared programming. But for all of them, the reports from customers matter more than anything an employee would ever find."

Wow, what a bad, bad move. Hope that they don't tell the CFO what types of risks they're taking betting the company like that. Lose the customer's data and get it off a backup? Fine. But what happens when the data was, inadvertently or otherwise, leaked to someone who isn't supposed to have it? Forget about some taggy Google Maps mashup...what about financial, credit, SSN, and medical records data? Forget about QA at this point after the government and trial lawyers pick over what's left of the carcasses of companies that 'test' this way.

Closer to the point, a guy named Boris Beizer wrote a software testing and QA book back in 1984 that, while it's out-of-print, has stood the test of time as a seminal, definitive work on software QA that is still applicable today, regardless of the technologies employed. In it, he stated one primary tenet of software testing: developers are poor resources to use in testing efforts, particularly of their own code, since they have an inherent bias towards their work that cannot be overcome nor relied upon to find substantial bugs in their code. This wasn't a slam on developers, as Beizer simply and correctly pointed out the natural biases we all have about our work, and software development isn't any different than other professions. And the Web as a computing platform doesn't change this tenet one iota.

In fact, the Web as a massive computing platform is no different than any other information system that came before it, with the requisite needs for adequate and substantial testing, revision and change management, and proper risk-mitigation techniques in production code and systems. Yes, the processes and techniques can certainly be optimized for speed and feature deployment, but thoroughness and the mitigation of outage, security, and data risks are still necessary no matter what new tools and techniques (or, as it appears, the lack of them) are engaged.

Finally, it appears that everything old is new again...we used to call 'eternal betas' prototypes back in the day....:)

Web 2.0

software testing

Converting Legacies to SOA: Part II

Some legacy systems are too large or obtuse to make a meaningful comparison in the manner I'm presenting here. Systems that fall into this category are usually third-party COTS packages (in which case one hopes the vendor has or is contemplating a service-enabled offering) or huge enough where the cost/time scope would be prohibitive - large ERP, supply chain, CRM, and HR packages come to mind. Again, since the majority of systems like this are third-party/outsourced, anything but the most trivial services will most likely be provided by the vendors and their partners.

What we're doing in this portion of the exercise is to arrive at some reasonable estimates of what it takes in time and money to significantly refactor (or completely redesign) the legacy systems with service-enablement added. This exercise is important for the analysis in Part III that follows because we will be making comparisons between the labor, time, and money expended to redesign versus the cost of maintaining the legacy systems in their current state.

Yes, this is a bit of a project management exercise, but the style of project management (agile or process-oriented) isn't of interest here. How long and how much is what we're after. In all cases, whether you iterate the development, testing, and deployment or engage in process-oriented techniques, the resulting system must have functionality and features equivalent to the ones it is replacing. As such, I haven't accounted for enhancements or alterations in the underlying business processes, which are separate issues.

The basics are as follows:

- Develop a strawman refactoring or re-design of each legacy system with service enablement. If you decide to consolidate a number of legacies with respect to infrastructure (e.g. consolidating database servers) make sure to allocate time and costs proportionally across each system. The strawman architectures don't need to have large amounts of detail. They only need to be good enough to allow you to make reasonable estimates of time to implement and costs.

- Once the strawman architecture is complete, then develop a preliminary task list that would be executed in sequence for each legacy system project.

- Assign timeframes to each task or task group.

- Estimate costs in labor, software licenses, and other project expenditures.

Strawman Architectures: It's easy to get bogged down and do a full-scale design of the legacy systems, and the analysis outcomes are going to be heavily influenced by the approach taken in this regard. My advice here is that you want answers to the overarching issues of legacy system retirement as soon as possible, so do enough design and modeling to get a good handle on what's going to be required with respect to time and cost. My take here is that this shouldn't take weeks per legacy system, but a few days to a week depending on complexity. Get help from your fellow architects and brainstorm it if you need to.

I'm assuming here that the SOA and other service-enablement issues have already been established or decided upon in your organization. If they haven't, it makes this entire exercise very nearly moot and you need to decide those issues and direction first before proceeding further.

Task Lists: Once the strawman architectures are complete, what it takes to implement and deploy the redesigned systems must be estimated from a task/work perspective. In project management-speak, this is known as developing a Work Breakdown Structure, or WBS. If your organization follows process-oriented project management styles, the WBS looks like a waterfall-model, start-to-finish single iteration. In an agile scenario, the iterations are mapped individually until you're comfortable that the proposed WBS retains the functionality being replaced or refactored. The amount of task detail that you develop is your decision, but the more comprehensive it is, the more sound your final analysis and estimates will be. Again, it's easy to get bogged down in details and trivialities that sap time with little or no return on the final analysis, so I suggest that the task depth be kept manageable and completed within a reasonable period of time.

An important thing to keep in mind at this step is that work breakdown structures are only concerned with tasks, not interdependencies or available resources. That comes later on and if you try to do two or three of these at once, you'll get bogged down with the complexity. Focus only on tasks at this point.

Timeframes: Now that you have a task structure for each legacy system, it's time to make estimates of how long the tasks will take. For our purposes, time estimates take two forms:

- The amount of labor to accomplish a task regardless of schedule. This is known in project management-speak as effort.

- The calendar time required to perform the tasks. This is known as duration.
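The difference between effort and duration is easy to blur, so here is a small sketch of how the two relate. The `Task` class, its field names, and the 8-hour working day are my own assumptions for illustration, and the calculation assumes the work divides evenly among the people assigned, which real tasks rarely do.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """One WBS task (hypothetical structure for illustration)."""
    name: str
    effort_hours: float        # total labor, regardless of schedule
    people: int = 1            # staff assumed to work the task in parallel
    hours_per_day: float = 8.0 # assumed working day

    def duration_days(self) -> float:
        """Calendar working days, assuming the effort splits evenly."""
        return self.effort_hours / (self.people * self.hours_per_day)


# Same effort, different durations depending on staffing.
solo = Task("Wrap billing lookups as a service", effort_hours=160)
pair = Task("Wrap billing lookups as a service", effort_hours=160, people=2)
print(solo.duration_days(), pair.duration_days())
```

Adding a second person halves the duration in this model but leaves the effort, and therefore the labor cost, unchanged.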

Cost Estimation: Now we're ready to get an estimate of the costs involved...

- From your task time estimates in the previous step, take the total time that was assigned for each task, add them up, and multiply by the burdened labor cost factor you obtained in Part I. This will give you your estimated labor costs.

- All software costs - licenses, support and maintenance contracts, etc. should be tallied up. I have not included hardware and network costs in this analysis because these issues are usually too broad to accurately address in a generic model. If you can comfortably estimate them and want to use them, then do so.

- Any other project-related expenses unique or specific to your organization and situation must be included.

After you've completed this section, total the three items above for each system to arrive at the overall cost estimate.
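As a rough sketch of that arithmetic: the function name, parameter names, and every figure below are invented for illustration, and the labor hours are assumed to be the effort estimates from the timeframes step.

```python
def estimate_project_cost(task_effort_hours, burdened_rate,
                          software_costs, other_expenses):
    """Total the three cost items for one redesign project.

    task_effort_hours: effort estimate (hours) for each task in the WBS
    burdened_rate:     burdened labor cost per hour from Part I
    software_costs:    licenses, support and maintenance contracts, etc.
    other_expenses:    anything else specific to your organization
    """
    labor = sum(task_effort_hours) * burdened_rate
    software = sum(software_costs)
    other = sum(other_expenses)
    return {
        "labor": labor,
        "software": software,
        "other": other,
        "total": labor + software + other,
    }


# Made-up numbers: three tasks, two software line items, one other expense.
estimate = estimate_project_cost(
    task_effort_hours=[120, 300, 80],
    burdened_rate=100,
    software_costs=[15_000, 5_000],
    other_expenses=[2_500],
)
print(estimate)
```

Keeping the three components separate in the result, rather than returning one number, makes it easier to see which item dominates when you compare systems later.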

Now that we've collected our data and performed preliminary design and scoping, we're ready to analyze what we have and where we should go in Part III to follow.

Monday, February 13, 2006

Converting Legacies to SOA: Don't Put Lipstick on a Pig

I'll be blunt: almost every IT organization has a few fragile, schizophrenic, resource-draining legacy systems in their shop. You know, the ones that always suffer outages, especially on weekends and holidays. The ones that take a battalion of developers and support people to maintain. The ones who have numerous and lengthy break-fix cycles. The ones that exist on hardware older than my teenage daughters. The ones that chew through IT expense budgets with high maintenance and support costs. And usually, they're still crucial to the business even though they should have been long ago retired and replaced with something else.

Well, sticking some type of service-enabled front-end on systems like this isn't going to make things any better. In fact, it could make things much worse operationally and financially for your IT shop because you're adding additional complexity to a system that already has numerous problems. While like many of you I find the siren song of agile, efficient, and cost-effective services very appealing, I have concerns that certain types of legacy systems are not suitable for service-enablement, never will be, and need refactoring or complete redesign. The trick here is to ferret these systems out before you attempt to service-enable them.

OK, I promised you strategy, and I'm pleased to give all of you one over the next few posts. To begin, you're going to need to get your hands on some data and facts, or 'educated' WAGs if such data isn't available or incomplete....

Overview of the Process

Part I: Legacy System Data or WAGs You'll Need

Part II: Refactoring/Redesign with Service-Enablement Project Data

Part III: Analysis

Part IV: Decision from Analysis

Part I: Data or WAGs You'll Need

For a given legacy system over the last 24 months (longer if you have the data, shorter if not...the more you have, the better the forecast/estimate will turn out):

- Percentage of uptime over the period

- Number (not percentage) of outages that had a severity of 'moderate' or higher (that is, subtract 'outages' that still allowed the majority of the system to function and be usable)

- Amount of staff hours supporting system (help desk & other support)

- Amount of staff hours maintaining system other than user support (break-fix work or developer/administrator maintenance that isn't user support)

- Amount of staff hours designing and developing enhancements, new features and subsystems, refactoring existing code, porting, integrations, etc. These aren't related to break/fix and maintenance described above. Make sure to include all development cycle activities including requirements, specification, testing, etc.

- Amount spent on annual maintenance agreements for all commercial software that the system utilizes. If a commercial software resource is shared with other systems, pro-rate this cost to each system as you choose and assign costs appropriately.

- The burdened cost of labor for your organization or even better, for your IT department. This value is a figure representing what it costs per hour for your company to employ someone, including salary, benefits, employer-paid taxes, and overhead for facilities, office equipment, etc. For most large organizations, this value usually falls between $80 and $125 USD per hour. If you don't know this value, ask your financial people if they have it, or for an estimate. For the purposes of this exercise, use one generic value even if your financial people have this number broken down by job or location categories, as it will make the analysis much easier and give you nearly the same results.
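If it helps to keep the numbers organized, the list above can be captured as one record per legacy system. This is only a sketch: the class, the field names, and the sample figures are all hypothetical, and the two cost methods are just one plausible way to turn the raw data into dollars using a single burdened rate.

```python
from dataclasses import dataclass


@dataclass
class LegacySystemData:
    """Part I figures for one legacy system (field names are illustrative)."""
    name: str
    months_observed: int               # 24 suggested; use what you have
    uptime_pct: float                  # percentage of uptime over the period
    significant_outages: int           # count of 'moderate' or higher outages
    support_hours: float               # help desk and other user support
    maintenance_hours: float           # break-fix and admin maintenance
    development_hours: float           # enhancements, refactoring, porting, etc.
    annual_software_maintenance: float # pro-rated if the software is shared

    def total_staff_hours(self) -> float:
        return (self.support_hours + self.maintenance_hours
                + self.development_hours)

    def labor_cost(self, burdened_rate: float) -> float:
        """Staff hours priced at one generic burdened rate."""
        return self.total_staff_hours() * burdened_rate

    def software_cost(self) -> float:
        """Annual maintenance agreements scaled to the observation period."""
        return self.annual_software_maintenance * self.months_observed / 12


# Invented figures for a made-up system.
billing = LegacySystemData(
    name="billing", months_observed=24, uptime_pct=97.5,
    significant_outages=9, support_hours=4000, maintenance_hours=6000,
    development_hours=2000, annual_software_maintenance=50_000,
)
print(billing.labor_cost(100.0), billing.software_cost())
```

A WAG goes into the same fields as a measured value; the record doesn't care, though you should note which figures are guesses.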

Friday, February 10, 2006

Requiem for a Legacy Architect

Our man is in his mid-50s and has been in the IT business his entire career. He ascended through the programming ranks to lead teams and design major systems for his employer. His development experience was in structured programming languages such as FORTRAN and C on big old DEC hardware like VAX-11's (which, back in the day, were pretty awesome machines even though P4 PCs pack much more punch at 1/1000th the size...:)).

However, over the years as he gained more responsibility as a non-management-level architect, he grew resistant to newer technologies and ways of accomplishing development and testing. For him, it was as if time needed to stand still and the systems he knew well and had a hand in developing would stand the test of time and be in production forever.

More troubling is how he spent his working days: mostly in meetings that either covered the same topics over and over each week (what I call 'Groundhog Day' meetings) or were full of technical minutiae and trivialities. While the Groundhog-style meetings are largely indicative of his organization's culture, he needn't have attended the latter set at all, which would have freed him to focus more on the lines of business and on architecture-related work (which his organization badly needed, and still does).

He also developed a nickname amongst the development staff: 'Dr. No,' after the villain in the James Bond movie. He liked to say 'no' a lot to new ideas and technologies. Web-based applications? No. Web services? No. Replace extremely aged, 30-year-old systems that, while they still work, are fragile and could break permanently at any moment? No. Since he is an architect in the organization, management solicits and respects his opinions enough that he has enormous sway in decision-making on projects like these.

Lately, however, the organization hired a CTO who, after shaking his head at the state of affairs within the production architecture, is moving to upgrade and consolidate a large number of systems, servers, and networks. All of which freaked our man out to the point where he spends an inordinate amount of time with all of us blasting these decisions and proclaiming that the initiatives 'will never work' in addition to wasting large sums of money.

The reasons for his behavior became clear to me a couple of weeks ago when he returned from a week of training. I asked him what he went off-site for...

Me: How was the class?

Him: I went to a C# programming class. It didn't go very well.

Me: Why not?

Him: I never understood object-oriented programming, so I didn't get what was being presented in the course.

Then it hit me: the man is a control freak, and if he doesn't understand (or want to understand) something, it's bad because he feels that he loses any control he has if he can't or won't take the time to understand newer technology and issues.

Since 'structured programming' more or less bit the dust some years ago and object orientation is pretty much assumed whenever anyone uses the terms 'programming' or 'programming languages,' you can tell how out-of-date (and out of touch) this man really is.

If architects and CTOs are going to succeed in their careers long-term, they must operate in what I call constant learning mode, which includes business as well as technologies. Another attribute successful architects and CTOs have is the ability to successfully refactor themselves career-wise when technology and business changes mandate them. The rest eventually get left in the dust, and unfortunately for our boy, he's recently been getting tuned-out by higher-ups and others are coming to the fore to drive technology development in the organization.

If you've got people like this in your organization, you're operating with a 100-pound weight strapped to your back. Even the time it takes to rebuff (or in extreme cases, usurp) people like this is much better spent getting some real work done. While time must always be allotted to playing organizational politics, the ROI on constantly dealing with guys like this is too low, but the price usually must be paid in any event until the situation comes to a head and a resolution is reached.

While it's sad to see this man flounder (or worse, go on another tirade) when topics like SOA and open-source come up, there is hope for guys like him if management and you play your cards right - and no, that doesn't necessarily mean giving him a pink slip. If these people have any worth left to the organization, have them oversee legacy operations and maintenance or even better, if they have excellent knowledge of your business, have them start dealing exclusively with the business as some sort of IT liaison, with the decision-making they were formerly responsible for safely in the hands of you as enterprise architect, your systems & data architects, and your CTO if you have one.

And as the years pass by, don't let this happen to you.

Stuff I'm Reading - February 2006

My system is short and sweet: I separate titles into geek and non-geek divisions and a simple rating system:

- Yeah Baby! - Buy this and read ASAP.

- Worth the $ - some minor quibbles, but take a good, long look

- Get from Library - got a few ideas or nuggets, but don't buy

- @$#)(&^! - author is entitled to his/her opinion, but I disagree with most if not all of the text

- Crap - Self-explanatory. Avoid.

Non-Geek Tomes

Geoffrey Moore, "Dealing with Darwin: How Great Companies Innovate at Every Phase of Their Evolution," Portfolio, 2005. Moore, the author of Inside the Tornado, Crossing the Chasm, and Living on the Fault Line, truly gets innovation (as does Clayton Christensen) and technology markets. In this volume, he applies Charles Darwin's theory of natural selection to business and markets, and justifies doing so: there are complementary processes in business that determine which companies survive and which don't. There are huge lessons in this book for business process and IT folks with respect to alignment and support of innovation.

Moore defines the word "core" (with respect to a business) as a concept used to describe differentiating innovation: "To succeed with core, you must take your value proposition to such an extreme that competitors either cannot or will not follow. That's what creates the separation you seek."

The case study is about Cisco, and Moore offers Cisco's leadership to followers market strategy as:

"Specifically, the other major players in the ecosystem must voluntarily embrace your platform. Knowing how much power this confers on another company, why would these companies ever do this? The answer is three-fold:

1. They get enormous productivity gains from leveraging your services.

2. They get access to a much broader marketplace.

3. They do not perceive the power you gain coming at their expense.

"Cisco's plan is to deliver on all three points....[It] seeks to leverage its own location advantage by providing services that are noncore to its major partners."

Hmmm...the entrepreneurs amongst us in the SaaS/Mashup spaces might want to pick this book up and digest it thoroughly. Others involved with enterprise architecture and business processes will gain enormous insights also, particularly on how to organize tactical thoughts and plans against business strategies. Rating: Yeah, Baby!

Geek Tomes

John Schmidt, David Lyle, "Integration Competency Center: An Implementation Methodology," Informatica Corporation, 2005. Short (153 pages) but highly organized book on IT integration strategies and the context between enterprise architecture and the business as well as integration issues. Kind of a mish-mash between framework/methodologies and research notations from others. Very useful in that they go a step further off of frameworks and discuss implementation (including defining the dreaded 'integration hairball'), but not far enough for those who want huge amounts of detail. A well done piece of work, but I wonder why a software vendor published this (granted, with no plugs or ads) and not a mainstream publishing house. Rating: Worth the $

Tuesday, February 07, 2006

Working Outages

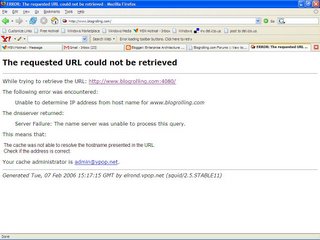

Well, something has happened today to reinforce my points. Those of you who are frequent visitors to my blog know that I use blogrolling.com to list links to other IT and architecture blogs that I read and that you will hopefully find interesting also. They should appear on the left under the 'Links' header. I check my blogs every day as a regular user before posting anything, and this morning, my blogroll disappeared inexplicably. Perhaps as you read this, it has returned, but at the time I posted this, it hadn't.

Here's what you get when entering blogrolling.com's URL at the current time:

Don't know what else to call this other than a hardcore outage. No explanation or redirection, no notice, no nothing. The basic services offered by this site are free, but the advanced ones aren't. What about the paying customers?

You might be thinking 'blogrolling.com...so what?' Replace 'blogrolling.com' with 'criticaldata.com' or 'customerservice.com' or 'taxreturn.com' or 'emergencymedicalrecords.com' and let's see how important reliability and security become as we move to the new paradigms.

No amount of agility or claims of 'working software' fixes the issues of reliability, security, and transparency. We have to design all of that in up front in architecture, regardless of what methods or practices we use.

Sunday, February 05, 2006

More on Mashups and SaaS

Mashups, SaaS, Web 2.0, whatever we're calling distributed & combined services this week, promote agility and flexibility. Reconfigure or redesign, basically, at will. It makes the web one giant OS or platform with wiki-like ability to access and update data.

Flexible and efficient. Who wouldn't want something like this as opposed to the monolithic apps we've built for decades? I sure would, and so would a lot of people I know.

And that very flexibility could be the great undoing of Web 2.0, if caution is thrown to the wind with respect to security and operational effectiveness. Right now, I've got a big problem with data security in schemes like this. For example, I'm not as much concerned about personal data flying over the ether as I am that it lands somewhere - in a database - where it shouldn't be. I'm just waiting for the first service or SaaS spoof incident to come forth. And that's not if, folks, but when.

Finally, we get to a conundrum I've thought about but haven't had anything substantial crystallize as yet: we have always been taught that single-points-of-failure are bad, bad, bad. Made sense then for various reasons, and continues to make sense today. In a services scheme, SPFs come part-and-parcel because the uniqueness-of-service concept overrides wide distribution of a service. A basic or me-too service would most likely reside on multiple provider URLs and not be subject to SPF issues. However, as the recent salesforce.com outages reveal, dependent entities are largely dead-in-the-water if the dependency on services or SaaS apps is large enough to shut down significant portions of the subscriber's business. Not good at all.
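The difference between a me-too service and a unique one can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the providers are stand-in functions rather than real service calls, and `call_with_failover` is a hypothetical helper, not anything a particular vendor offers.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every provider in the list has failed."""


def call_with_failover(providers: Sequence[Callable[[], str]]) -> str:
    """Try each provider in order and return the first successful result."""
    errors = []
    for provider in providers:
        try:
            return provider()
        except Exception as exc:  # any failure moves us on to the next provider
            errors.append(exc)
    raise AllProvidersFailed(f"{len(errors)} provider(s) failed")


# Stand-in providers for illustration only.
def provider_down() -> str:
    raise ConnectionError("service unavailable")


def provider_up() -> str:
    return "quote: 42.10"


# A me-too service: a second provider covers for the first.
print(call_with_failover([provider_down, provider_up]))

# A unique service: one provider, so its outage is the subscriber's outage.
try:
    call_with_failover([provider_down])
except AllProvidersFailed as exc:
    print("dead in the water:", exc)
```

With a list of one, the loop has nothing to fall back on, which is the single-point-of-failure problem in miniature.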

Agility will never trump security and robustness, as failures in the latter two present hugely unacceptable risks to organizations. If we're going to do Web 2.0 right, these issues deserve as much consideration and effort as the agile mashing of services and the building of them do.

Saturday, February 04, 2006

Mashups and Outsourced Services: Caution for Now

'Dion Hinchcliffe thinks that 80% of enterprise applications could be provided by external services, which is a great equalizer for smaller businesses that don't have huge IT budgets, and could almost completely disconnect the issue of business agility from the size of your development team. I think that it's time for some hard introspection about what business that you're really in: if your organization is in the business of selling financial services, what are you doing writing software from scratch when you could be wiring it together using BPM and the global SOA that's out there?'

Wonderful scenario, but there have been a couple of events over the last few weeks that should give one pause before immediately going forth and downsizing the development teams: Salesforce.com's outages and subsequent commentary. In a nutshell, salesforce.com suffered a severe outage in their CRM site a few weeks ago...extending out to hours, and a minor one sometime after that.

While no system can exhibit 100% uptime, what's more troublesome is the "I'm peeing on your leg and telling you that it's raining" response by Salesforce's management to the, as they characterized it, 'minor' outages suffered. Nick Carr covered the specifics of the outages and other commentary about them here.

This situation illustrates the primary concerns I currently have with SOA and SaaS, particularly when the major distribution of services comes from outside an organization: reliability, with secondary issues about orchestration and scale/performance.

Dion's scenario will occur eventually (and should), but we're on a curve right now where there are going to be repeated incidents of this type over the next few years. The trick will be what kinds of trust level and transparency ensue with outages and, more importantly, how they're handled by SaaS suppliers. Anything other than brutal honesty and better performance down the road smells only like hucksterism and hype.

Which are in large quantity at the moment in the SOA and SaaS segments of our industry.

Thursday, February 02, 2006

Is it Scope Creep, or a Negotiation?

The standard colloquialism "gathering requirements" is a misnomer, as it gives the appearance that we scoop up or harvest requirements from the business much like a farmer reaps a harvest some length of time after planting and tending to his crops.

In real life, requirements are not often gathered, they're negotiated, and a good deal of those negotiations occur after the 'requirements gathering' phase of a project has passed. I have unscientifically polled students with IT backgrounds in my project management courses, posing the question "How many of you have had any project in your career where 100% of the requirements were known up front and that's exactly what was delivered at the end of the project?"

The answer, of course, is zero. Requirements are always continuously refined and/or negotiated as development proceeds. Perhaps we should replace the often-dreaded moniker 'scope creep' with what it actually is in reality: 'continuous negotiation.'

Wednesday, February 01, 2006

Google - SELL NOW!